For a customer, I deployed a new vRealize Log Insight 8.10 cluster as a test. After the initial installation and configuration of the first vRLI node, I noticed that the performance was particularly bad. It was not constantly this bad, but the slowdowns occurred frequently.

The bad performance first showed itself when I chose one of the options in the menu on the left side of the screen. The whole screen seemed to freeze, and the requested information only appeared after 10 to 30 seconds.

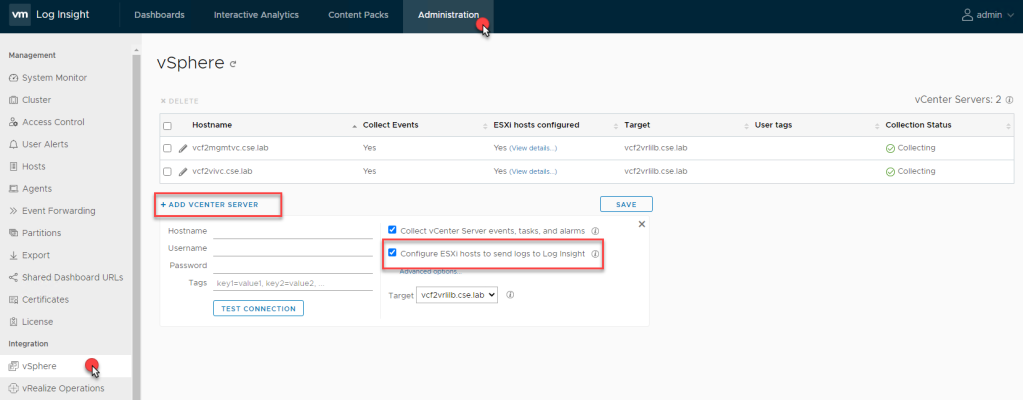

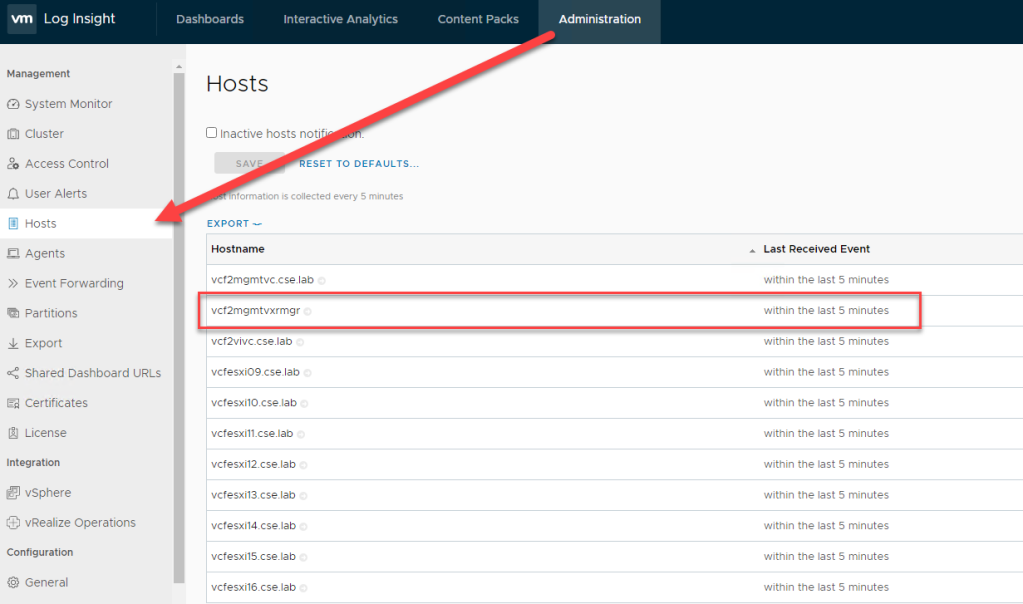

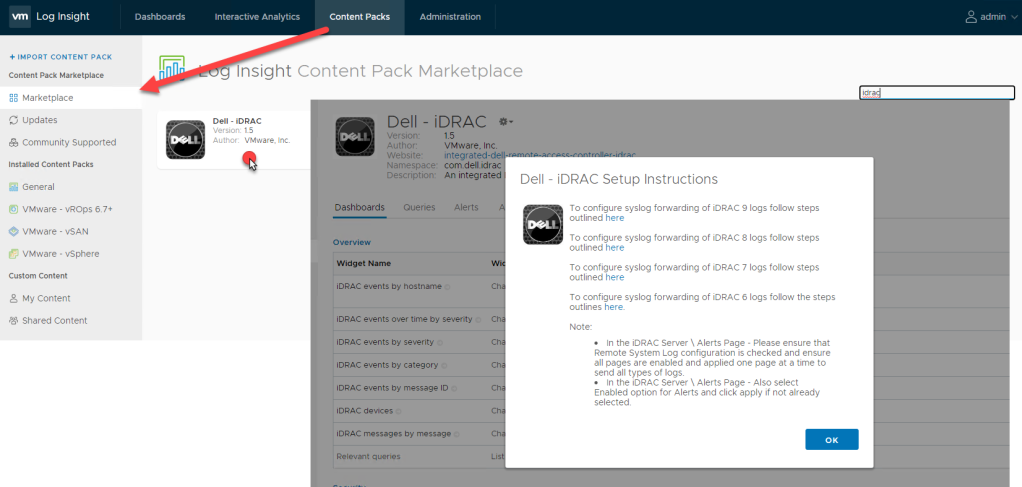

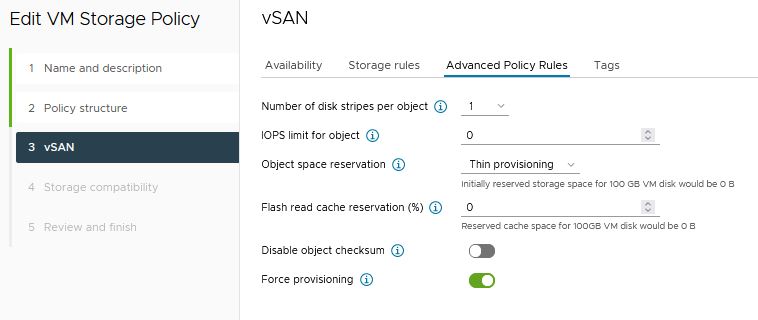

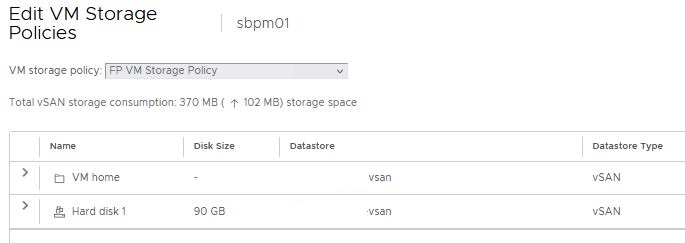

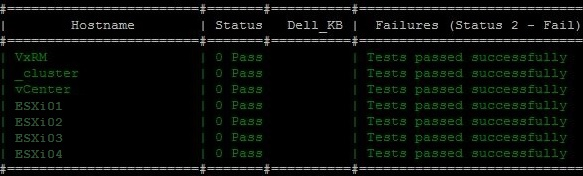

I decided to complete the cluster setup first. After adding some vCenters and importing Content Packs, everything technically worked as designed. The bad performance remained, however, even after I temporarily added memory to the nodes.

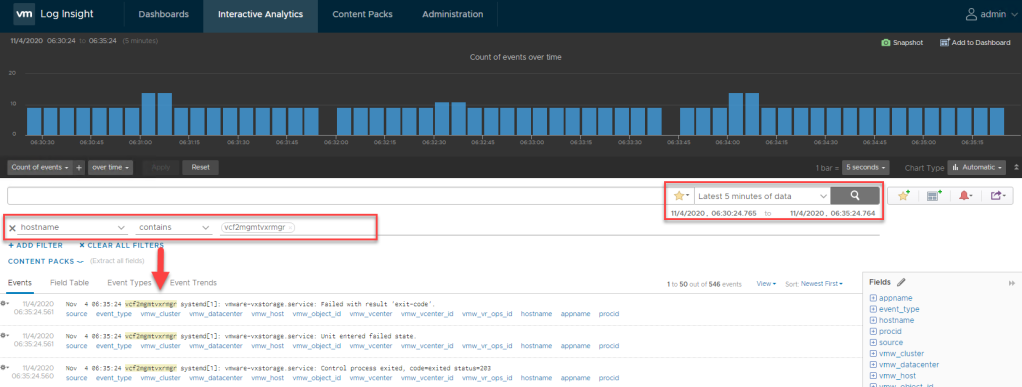

During one of the moments when the performance had dropped, I noticed something in the lower left-hand corner of the screen. The following messages were displayed:

- Read <vRLI FQDN>

- Transferring data from <vRLI FQDN>

- Connecting to cdn.pendo.io

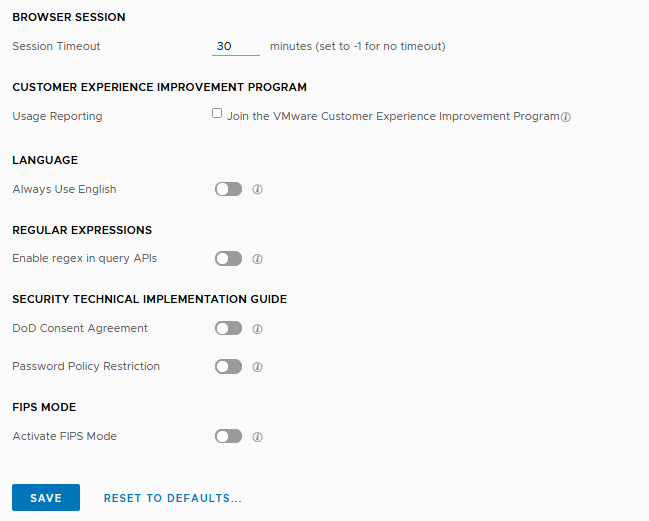

The last bullet was the key to resolving this issue. I searched for information about “cdn.pendo.io” and found the article “Join or Leave the VMware Customer Experience Improvement Program”. At the bottom of that article is a section called “What to do next”, which states:

After CEIP is enabled, when a user logs in to vRealize Log Insight, they see a banner at the top of their window that asks whether they want Pendo to collect data based on their interaction with the user interface.

- If the user clicks Accept, Pendo collects their data and sends it to VMware

- If the user clicks Decline, Pendo does not collect their data

In the General Configuration section of the vRLI configuration, I deselected the Usage Reporting option (Join the VMware Customer Experience Improvement Program). This resolved the issue for me.

In this case there was no need to join the VMware CEIP, so this is an acceptable solution for me.
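If you want to check whether the machine running your browser has trouble reaching cdn.pendo.io before changing the setting, a quick connection test can help. The sketch below is my own illustration and not something from the VMware article: the function name `probe_tcp`, port 443 and the 5 second timeout are assumptions. It only measures how long a plain TCP connection attempt takes. A slow or failed attempt would merely suggest that the delays are related to reaching that host; it does not prove it.

```python
import socket
import time
from typing import Tuple


def probe_tcp(host: str, port: int = 443, timeout: float = 5.0) -> Tuple[bool, float]:
    """Try to open a TCP connection and return (reachable, seconds_elapsed).

    Hypothetical helper for illustration; port and timeout are assumptions.
    """
    start = time.monotonic()
    try:
        # create_connection resolves the name and connects, honoring the timeout.
        with socket.create_connection((host, port), timeout=timeout):
            reachable = True
    except OSError:
        # Covers DNS failures, refused connections and timeouts.
        reachable = False
    return reachable, time.monotonic() - start


if __name__ == "__main__":
    ok, elapsed = probe_tcp("cdn.pendo.io")
    state = "reachable" if ok else "NOT reachable"
    print(f"cdn.pendo.io:443 is {state} (attempt took {elapsed:.1f}s)")
```

Run it on the workstation where the vRLI interface feels slow, not on the vRLI appliance itself, because it is the browser that connects to cdn.pendo.io.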