After a week in Barcelona for VMworld Europe 2019, I came home with a lot of new information and ideas. This post covers Windows Server Failover Cluster (WSFC) on vSAN and how to back it up and restore it. WSFC on vSAN is now fully supported on vSphere 6.7 Update 3 and, for Dell VxRail users, on code 4.7.300.

I started by reading VMware KB74786. It's a good starting point and describes a straightforward deployment.

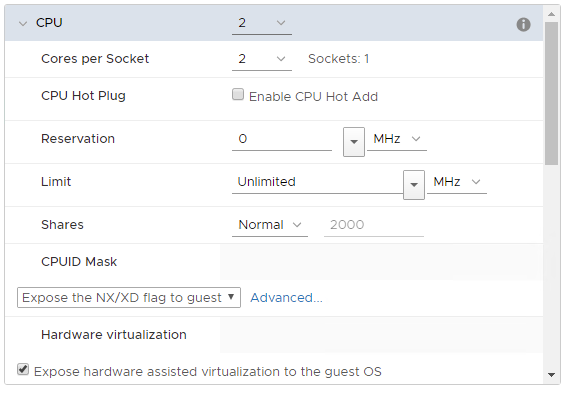

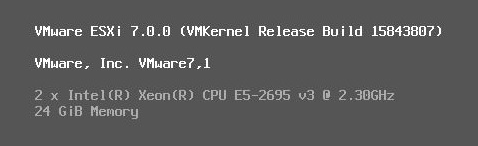

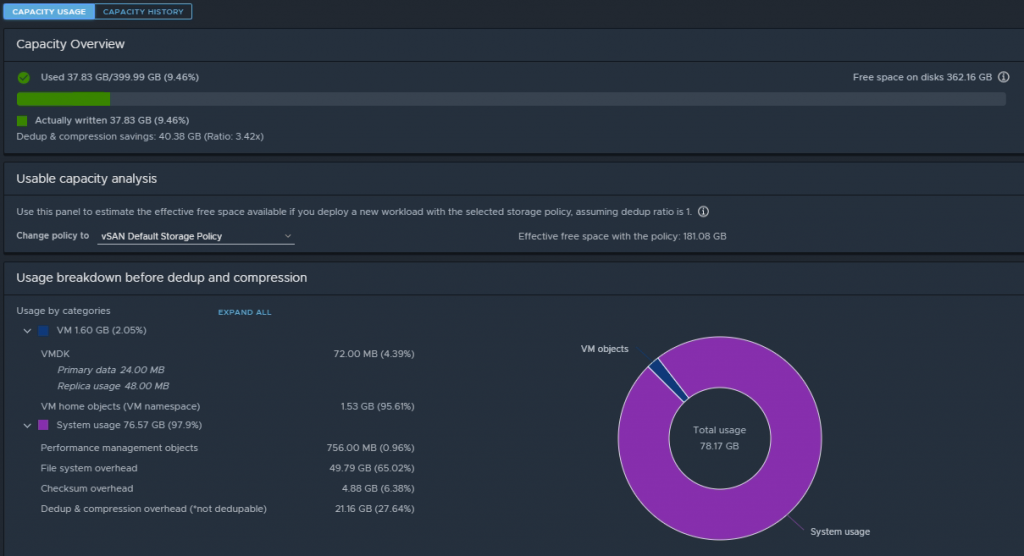

First, I deployed two Windows Server 2016 VMs in a vSAN cluster. After the initial deployment, I added the Failover Clustering feature and the File Server role on both VMs. Then I powered off both VMs and added a Paravirtual SCSI controller with physical bus sharing to each of them.

Next, I reconfigured vm1 and added two new disks: a 5 GB disk for the quorum and a 50 GB disk for the file server data. After reconfiguring, I powered both VMs on again.

On vm1, I brought the new disks online and formatted them as NTFS. The next step is crucial before the cluster can be created. If you skip it, the disks are not detected during cluster configuration. Power off vm2 and add the two existing disks from vm1 to its Paravirtual SCSI controller. Then power vm2 on again.
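That crucial step can be expressed as a consistency check over both nodes. The following is a minimal sketch that uses a hypothetical in-memory model rather than a vSphere API. The classes `ScsiController` and `Node`, the function `check_shared_disks`, and the disk names are all illustrative assumptions. The bus sharing strings mirror vSphere's `noSharing` / `virtualSharing` / `physicalSharing` values.

```python
from dataclasses import dataclass, field


@dataclass
class ScsiController:
    kind: str  # controller type, e.g. "ParaVirtual" (hypothetical label)
    bus_sharing: str  # "noSharing", "virtualSharing" or "physicalSharing"
    disks: list[str] = field(default_factory=list)  # attached disk backing files


@dataclass
class Node:
    name: str
    shared_controller: ScsiController  # the controller that carries the cluster disks


def check_shared_disks(nodes: list[Node]) -> list[str]:
    """Return a list of problems; an empty list means the layout looks consistent.

    Hypothetical helper: every node needs a Paravirtual controller with physical
    bus sharing, and every node must see the disks created on the first node.
    """
    problems: list[str] = []
    for node in nodes:
        ctrl = node.shared_controller
        if ctrl.kind != "ParaVirtual":
            problems.append(f"{node.name}: controller is {ctrl.kind}, expected ParaVirtual")
        if ctrl.bus_sharing != "physicalSharing":
            problems.append(f"{node.name}: bus sharing is {ctrl.bus_sharing}, expected physicalSharing")
    # The first node (vm1) owns the disks; the others must attach the same files.
    reference = set(nodes[0].shared_controller.disks)
    for node in nodes[1:]:
        for disk in sorted(reference - set(node.shared_controller.disks)):
            problems.append(f"{node.name}: missing shared disk {disk}")
    return problems


if __name__ == "__main__":
    # Hypothetical disk names for the quorum (5 GB) and data (50 GB) disks.
    shared = ["[vsanDatastore] vm1/vm1_1.vmdk", "[vsanDatastore] vm1/vm1_2.vmdk"]
    vm1 = Node("vm1", ScsiController("ParaVirtual", "physicalSharing", list(shared)))
    vm2 = Node("vm2", ScsiController("ParaVirtual", "physicalSharing", []))
    print(check_shared_disks([vm1, vm2]))  # vm2 still misses both disks
```

Running the check before cluster validation shows the situation described above: until vm2 attaches vm1's disks, it reports them as missing.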

Creating the cluster is now as straightforward as on physical hardware. You need an FQDN for the cluster itself (cluster-core) and one for the file server role's client access point (cluster-cap). There is plenty of documentation about configuring a Windows failover cluster; otherwise, ask your favourite Windows admin :-).

After the deployment I ran some failover and failback tests. The failover was surprisingly fast. Admittedly there were not many client connections, but I am really impressed.

Backup and restore

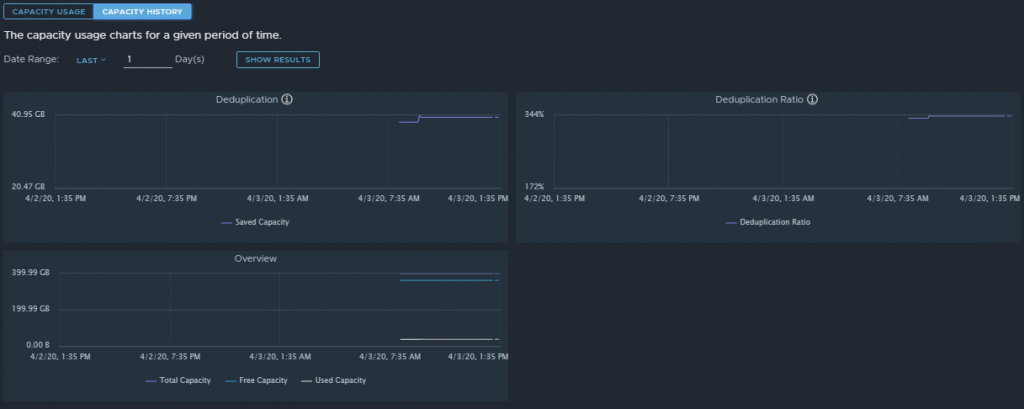

I was already convinced that WSFC on vSAN works. But how do you back up and restore the cluster and its data? This question matters because snapshots are not supported with WSFC on vSAN (see VMware KB74786).

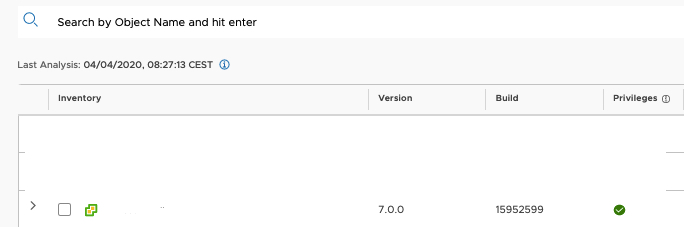

I performed the backup and restore tests in my test lab with Veeam Backup & Replication 9.5 Update 4b.

The backup and restore test configuration:

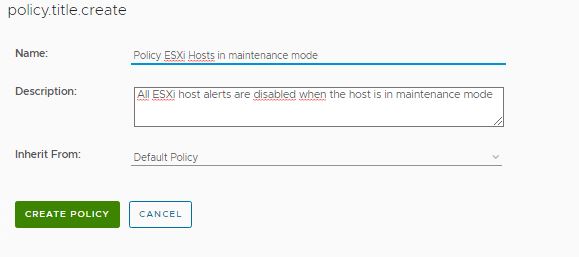

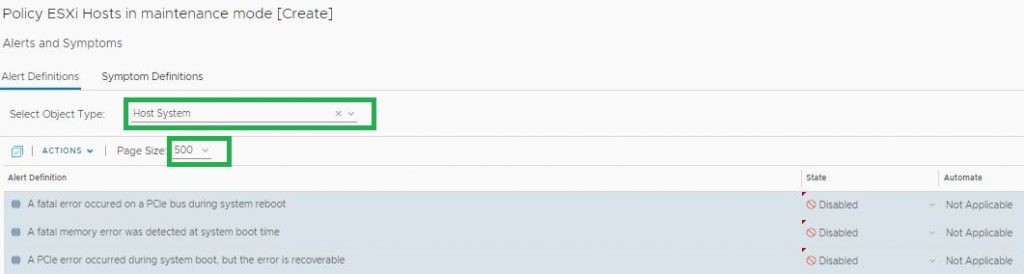

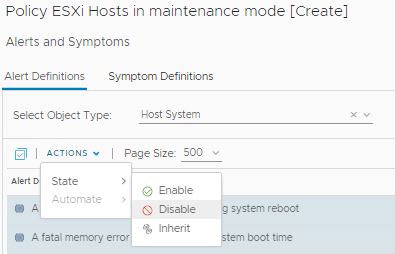

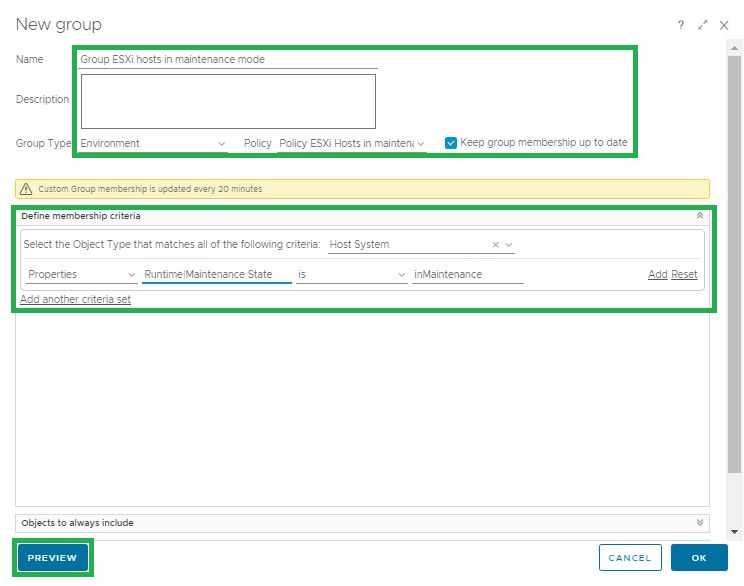

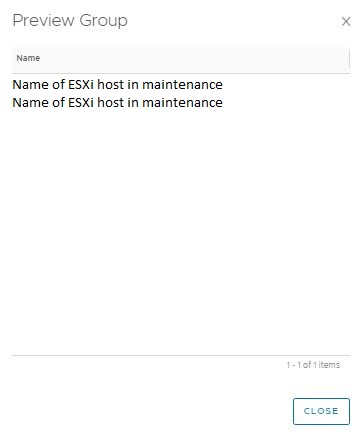

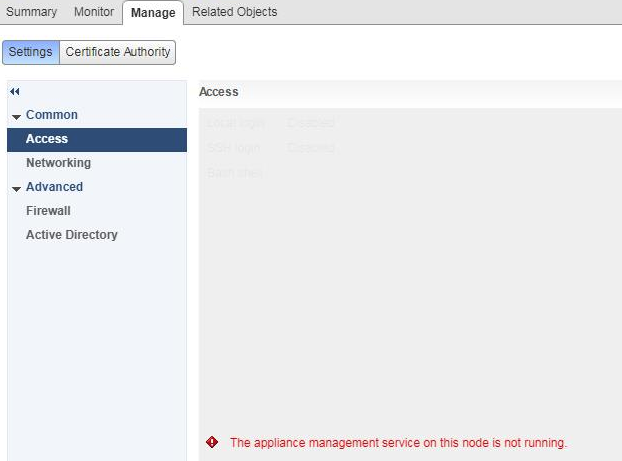

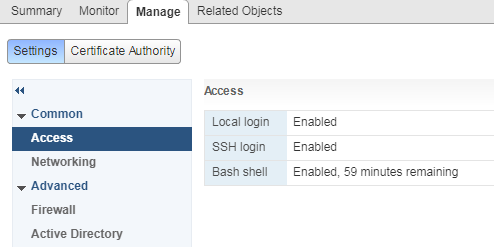

First, I excluded the two VMs from snapshot-based backup. Then I set up agent-based protection:

- In the Inventory view, create a new protection group for virtual failover clusters.

- On the Active Directory tab, search for and add the two nodes and the cluster-core.

- On the Exclusions tab, clear "Exclude All virtual machines". This is important; otherwise the cluster nodes can't be added to the protection group.

- Use a service account with sufficient permissions and keep the defaults on the Options tab.

- After you complete the New Protection Group wizard, Veeam Agent for Windows is deployed on the cluster nodes. A reboot is required.

- Configure a backup job and run the backup!

Using Veeam Agent for Windows is the trick in this test: I treated the cluster and its nodes as if they were physical machines. After the initial backup, I created a recovery ISO for both nodes for a bare metal restore (BMR).
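The reasoning behind this split can be sketched as a simple rule. Snapshots are unsupported on VMs whose SCSI controllers use bus sharing, so those VMs leave the snapshot-based jobs and go to the agent-based protection group. This is a minimal illustration only. The inventory format and the helpers `backup_method` and `plan_backup` are hypothetical and are not part of any Veeam or VMware API. The mode strings again mirror vSphere's bus sharing values.

```python
def backup_method(bus_sharing_modes: list[str]) -> str:
    """Pick a backup approach from the bus sharing modes of a VM's SCSI controllers."""
    # Any controller with bus sharing (physical or virtual) rules out VM snapshots,
    # so the VM is protected from inside the guest with an agent instead.
    if any(mode != "noSharing" for mode in bus_sharing_modes):
        return "agent"
    return "snapshot"


def plan_backup(inventory: dict[str, list[str]]) -> tuple[list[str], list[str]]:
    """Split VMs into snapshot-based backup jobs and the agent protection group."""
    snapshot_jobs: list[str] = []
    agent_group: list[str] = []
    for vm_name, modes in sorted(inventory.items()):
        if backup_method(modes) == "agent":
            agent_group.append(vm_name)
        else:
            snapshot_jobs.append(vm_name)
    return snapshot_jobs, agent_group


if __name__ == "__main__":
    # Hypothetical inventory: two WSFC nodes plus an ordinary VM.
    inventory = {
        "vm1": ["noSharing", "physicalSharing"],
        "vm2": ["noSharing", "physicalSharing"],
        "dc01": ["noSharing"],
    }
    print(plan_backup(inventory))  # → (['dc01'], ['vm1', 'vm2'])
```

In the setup above, the protection group additionally contains the cluster-core object, which is a cluster name rather than a VM, so it is outside this sketch.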

I successfully performed the following restores from a Veeam Windows ReFS landing zone server:

- File/folder restore

- Volume restore

- Bare metal restore

Everything went as expected. Only a BMR with the recovery ISO is a bit different from a BMR of a physical server, so keep the following in mind. Normally, when you create a recovery ISO, all network drivers are included, but the VMware VMXNET3 driver is not. I asked Veeam support whether the VMXNET3 driver could be added; it can't. However, there is an option to load a driver while the recovery ISO starts up. During my test I was able to browse to the driver in the Windows folder, C:\Windows\System32\DriverStore\FileRepository\vmxnet3.inf_amd64_583434891c6e8231, and load it successfully. There may be other ways to achieve this in the future.
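The suffix of that driver folder name is specific to the installed driver package, so it will likely differ on your nodes. The following is a minimal sketch for noting the actual folder on each node before you need it in the recovery environment. It assumes Python is available on the node. The helper `find_vmxnet3_driver` is hypothetical. The `windows_dir` parameter is there in case the Windows installation is reachable under a different root.

```python
import glob
import os


def find_vmxnet3_driver(windows_dir: str = r"C:\Windows") -> list[str]:
    """Return the VMXNET3 driver folders found in the Windows driver store.

    Hypothetical helper: it only globs for folders named vmxnet3.inf_amd64_*
    under System32\\DriverStore\\FileRepository; it does not install anything.
    """
    pattern = os.path.join(
        windows_dir, "System32", "DriverStore", "FileRepository", "vmxnet3.inf_amd64_*"
    )
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    for folder in find_vmxnet3_driver():
        print(folder)  # path to browse to when loading the driver from the recovery ISO
```

Globbing on the `vmxnet3.inf_amd64_` prefix avoids hard-coding the hash part of the folder name, which changes with the driver version.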

During the BMR I was only able to recover the system volumes. I assume this is by design, because normally the other cluster node, including the data volumes, is still online. Finally, I successfully tested a recovery of an entire cluster data volume.

Conclusion:

The test deployment of WSFC on vSAN helped me better understand how it works. I definitely see possibilities for WSFC on vSAN.

The backup and restore tests helped me find out how to back up and restore a WSFC on vSAN cluster. The tested backup configuration is supported by Veeam: I logged a case to ask, and they confirmed it. Make sure your guest OS is supported; see the Veeam release notes.

Cheers!