Recently I was asked whether it is possible to receive an email notification when a vSAN storage policy with Force Provisioning enabled is applied to a VM. In this blog post I want to show that this is possible.

Use Case – An administrator wants to apply a vSAN storage policy with Force Provisioning enabled to virtual machines because of a possible shortage of vSAN storage capacity. In my opinion, this is not a very good idea in a production environment!

Goal – Get an email notification when a vSAN storage policy with Force Provisioning enabled is applied to a VM. There is also a wish to make this visible in a dashboard.

Solution – With a bit of reverse engineering and vRealize Log Insight (vRLI) it’s possible to achieve this.

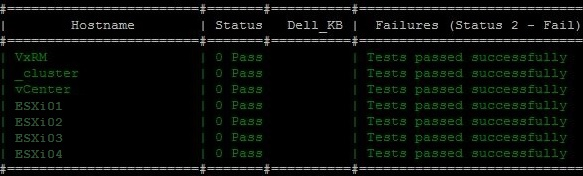

Lab setup – A VMware vSphere 7.0 Update 3a vSAN cluster and vRLI 8.6.

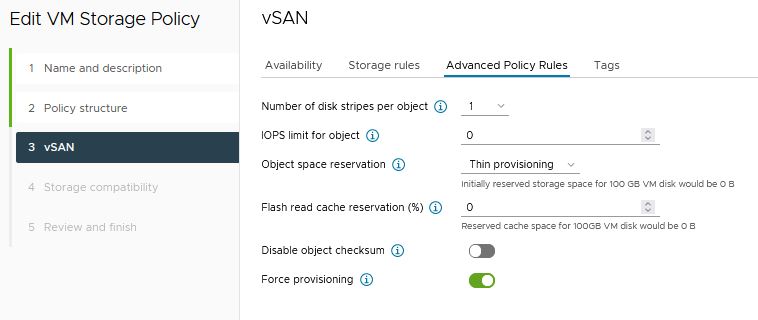

The first step is to create a storage policy with Force Provisioning enabled. We name this policy “FP VM Storage Policy”.

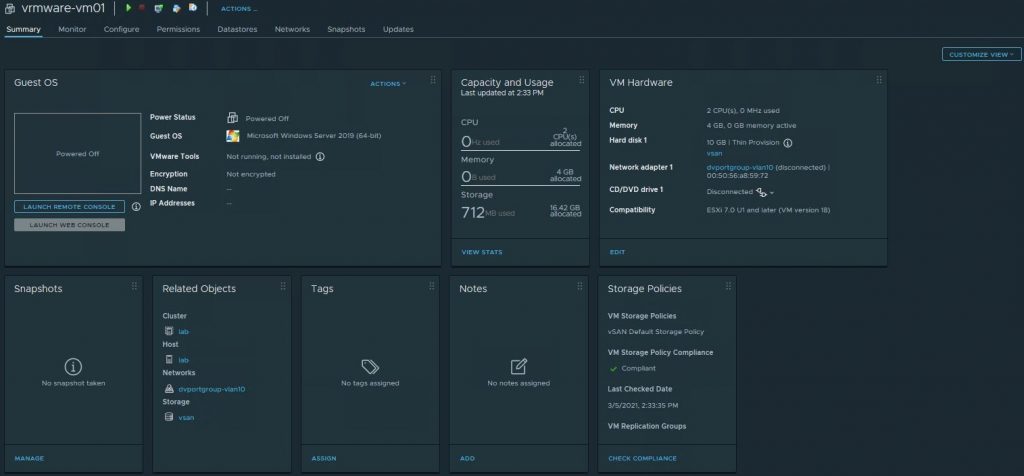

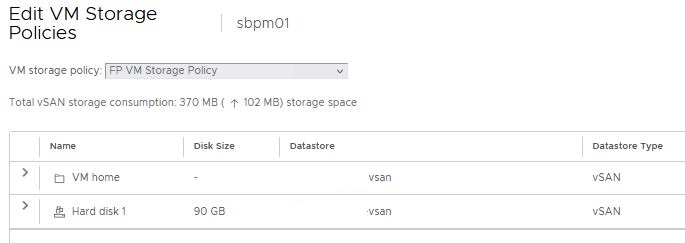

Next, we apply the new storage policy “FP VM Storage Policy” to our test VM “sbpm01”.

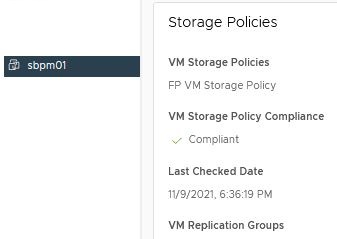

The policy is successfully applied to the VM “sbpm01”.

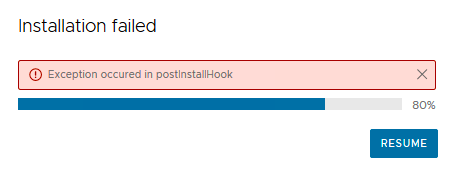

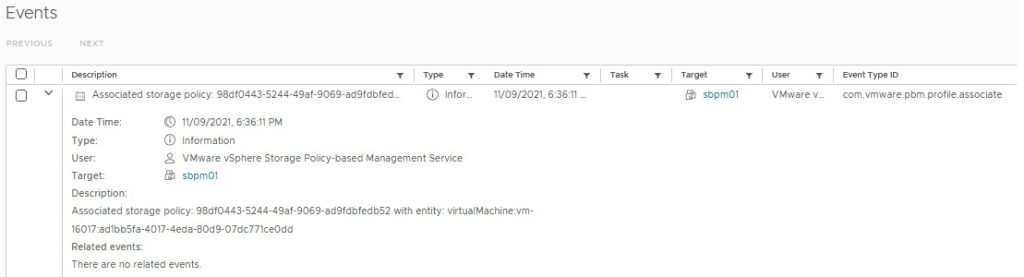

Now we need some reverse engineering, because it’s not possible to search for the name of the storage policy in vRLI. We move to the events of sbpm01 in vCenter.

Note the following two details:

- Event Type ID: com.vmware.pbm.profile.associate

- Associated storage policy: 98df0443-5244-49af-9069-ad9fdbfedb52

This is the information we need to create a new filter in vRLI Interactive Analytics.

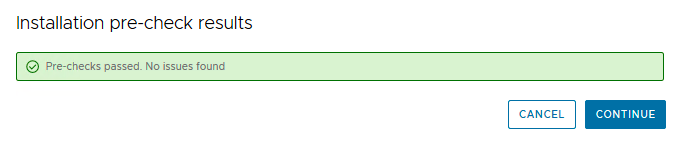

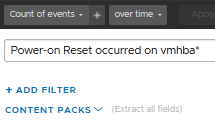

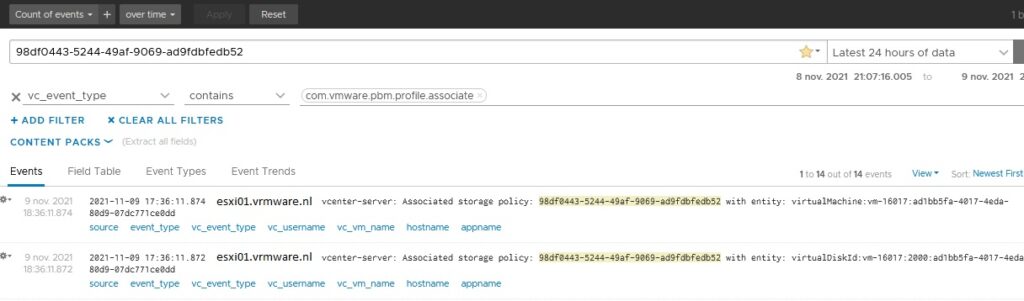

Add the associated storage policy ID “98df0443-5244-49af-9069-ad9fdbfedb52” to the text field and add an extra filter (+ ADD FILTER). From the pull-down menu, choose “vc_event_type” contains com.vmware.pbm.profile.associate. Then choose a time window. In our example I chose “Latest 24 hours of data”. You can also choose the last hour or the last 5 minutes of data; it depends on when you applied the policy and when you search in vRLI.
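To make the filter logic explicit, here is a minimal Python sketch of the same two conditions. It assumes the events have been exported from vRLI as a list of dictionaries; the `text` key and the sample records are hypothetical, and only the `vc_event_type` field name, the policy ID, and the event type come from the steps above.

```python
POLICY_ID = "98df0443-5244-49af-9069-ad9fdbfedb52"
EVENT_TYPE = "com.vmware.pbm.profile.associate"


def matches_filter(event, policy_id=POLICY_ID, event_type=EVENT_TYPE):
    """Return True when the event text contains the policy ID and the
    vc_event_type field contains the event type (both substring matches)."""
    return (policy_id in event.get("text", "")
            and event_type in event.get("vc_event_type", ""))


# Hypothetical sample records, not real vRLI output.
sample_events = [
    {"text": f"Associated storage policy: {POLICY_ID}",
     "vc_event_type": EVENT_TYPE},
    {"text": "Associated storage policy: 00000000-0000-0000-0000-000000000000",
     "vc_event_type": EVENT_TYPE},
    {"text": f"Some other event mentioning {POLICY_ID}",
     "vc_event_type": "some.other.event.type"},
]

hits = [e for e in sample_events if matches_filter(e)]
print(len(hits))
```

Only the first record satisfies both conditions, which mirrors what the text field plus the extra filter do together in Interactive Analytics.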

In the results above you don’t see the name of the VM with the applied storage policy. In the last image above, to the right of Events, you see the Field Table section. Select Field Table and look for the row named vc_vm_name. It shows the friendly name of the VM with the newly applied storage policy, as in the image below.

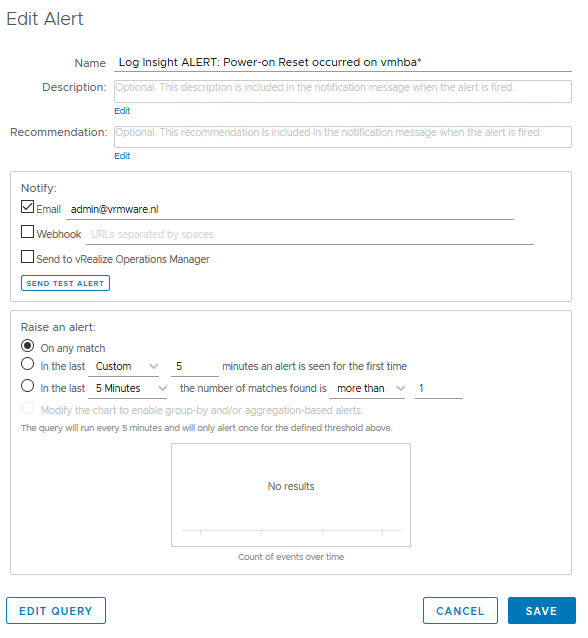
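If you work with exported events instead of the Field Table, the VM names can be collected from the same field. This is only a sketch: it assumes each exported event is a dictionary that carries a `vc_vm_name` key, and the sample records are made up for illustration.

```python
def affected_vms(events):
    """Collect the unique, sorted VM names from the vc_vm_name field,
    skipping events where the field is missing or empty."""
    return sorted({e["vc_vm_name"] for e in events if e.get("vc_vm_name")})


# Hypothetical matching events, not real vRLI output.
matching_events = [
    {"vc_vm_name": "sbpm01"},
    {"vc_vm_name": "sbpm01"},  # same VM reported twice
    {"vc_vm_name": ""},        # event without a VM name
]

print(affected_vms(matching_events))
```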

Finally, you want an email notification and a dashboard. I am not going to explain here how to create them; this is done in vRLI the same way you normally create notifications and dashboards. Press icon (1) to create an email notification and icon (2) to create a dashboard.

If you also want to receive an email notification when the VM gets another storage policy applied, create another filter with the following two details.

- Event Type ID: com.vmware.pbm.profile.dissociated

- Associated storage policy: 98df0443-5244-49af-9069-ad9fdbfedb52
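Combining both event types lets you work out which VMs still have the policy at a given moment. The sketch below replays events in time order. It assumes both event types carry a `vc_vm_name` field and that each exported event has a sortable `timestamp` key (hypothetical). The VM name "sbpm02" and the sample records are invented for illustration.

```python
ASSOCIATE = "com.vmware.pbm.profile.associate"
DISSOCIATE = "com.vmware.pbm.profile.dissociated"


def vms_with_policy(events):
    """Replay events in time order and return the set of VMs that
    currently have the policy associated."""
    current = set()
    for event in sorted(events, key=lambda e: e["timestamp"]):
        vm = event["vc_vm_name"]
        if event["vc_event_type"] == ASSOCIATE:
            current.add(vm)
        elif event["vc_event_type"] == DISSOCIATE:
            current.discard(vm)
    return current


# Hypothetical events, already filtered on the storage policy ID.
events = [
    {"timestamp": 2, "vc_vm_name": "sbpm01", "vc_event_type": DISSOCIATE},
    {"timestamp": 1, "vc_vm_name": "sbpm01", "vc_event_type": ASSOCIATE},
    {"timestamp": 3, "vc_vm_name": "sbpm02", "vc_event_type": ASSOCIATE},
]

print(vms_with_policy(events))
```

In this sample, sbpm01 gets the policy and loses it again, so only sbpm02 remains in the result.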

Conclusion:

In this blog post I wanted to demonstrate that it is possible with vRLI to receive an email notification when a VM has a storage policy applied where Force Provisioning is enabled. A disadvantage is that if the VM gets a different storage policy with different settings, this email notification no longer applies, because the notifications are based on this specific storage policy ID.

I have shown that it works, but in my view it is not a solution for a production environment.